Titration is a process commonly used to quantify a substance in a solution. It is one of the most popular analytical techniques, and you may remember performing titration experiments at school. Yet this simple process is precise enough to be used in a wide range of industrial applications. Most notably, the pharmaceutical industry relies on titration to analyse ingredients and to check the quality of products such as medicines.

What Does Titration Mean?

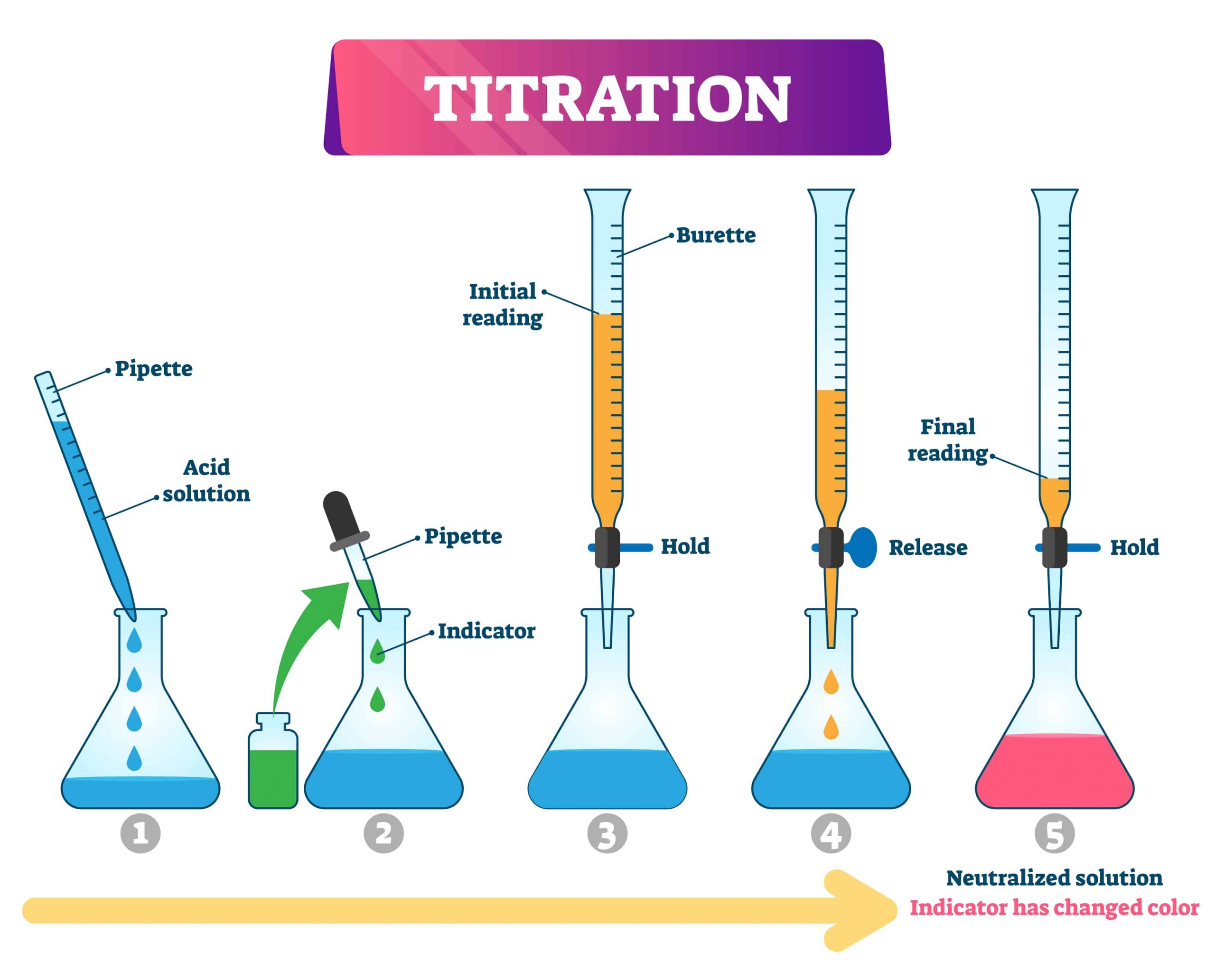

Titration is a common technique in analytical chemistry for determining the concentration of an unknown solution by gradually adding a solution of known concentration (the titrant). The titrant is added bit by bit until the reaction between the two is complete; in an acid–base titration, this is the point of neutralisation.

The end of the reaction is usually signalled by a change in the colour of the solution. To make this change visible, a chemical indicator is often added to the analyte (the solution of unknown concentration), where it signals the end of the titration.

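For example, in an acid–base titration the indicator changes colour over a characteristic pH range, so an indicator is chosen whose range includes the pH at the equivalence point. That pH is 7 only when a strong acid is titrated with a strong base. For greater precision, a pH meter can be used to follow the pH throughout the titration and locate the endpoint from the resulting curve.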
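To show how pH meter readings can be turned into an endpoint, here is a minimal Python sketch of the first-derivative method, one common way to read a titration curve: the endpoint is taken as the midpoint of the interval where the pH changes fastest per millilitre of titrant. The function name `endpoint_from_ph_curve` and the readings are hypothetical, and real instruments often use finer increments or curve fitting.

```python
def endpoint_from_ph_curve(volumes_ml, ph_values):
    """Estimate the endpoint volume (mL) from paired pH-meter readings.

    First-derivative method: the endpoint is the midpoint of the interval
    where |delta pH / delta V| is largest.
    """
    if len(volumes_ml) != len(ph_values) or len(volumes_ml) < 2:
        raise ValueError("need two or more paired volume/pH readings")
    best_slope = -1.0
    best_midpoint = None
    for i in range(len(volumes_ml) - 1):
        dv = volumes_ml[i + 1] - volumes_ml[i]
        if dv <= 0:
            raise ValueError("volumes must be strictly increasing")
        # Absolute slope, so the same code works whether pH rises or falls.
        slope = abs(ph_values[i + 1] - ph_values[i]) / dv
        if slope > best_slope:
            best_slope = slope
            best_midpoint = (volumes_ml[i] + volumes_ml[i + 1]) / 2
    return best_midpoint


# Hypothetical readings for a strong acid titrated with a strong base.
volumes = [0.0, 10.0, 20.0, 24.0, 24.9, 25.1, 26.0, 30.0]
ph = [1.0, 1.4, 2.0, 2.7, 3.7, 10.3, 11.3, 12.0]
print(f"Estimated endpoint: {endpoint_from_ph_curve(volumes, ph):.2f} mL")
```

With these readings the steepest rise lies between 24.9 mL and 25.1 mL, so the estimate is the midpoint of that interval.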

Many titrations are designed to determine the molar proportions in which an acidic solution neutralises an alkaline solution, or vice versa. However, titrations can be classified into several categories according to the type of reaction involved:

- Acid–base titration

Acid–base titration is the most common and best-known type of titration. The reactants are an acid and a base, and the products are a salt and (usually) water. The aim is to determine the concentration of either the acid or the base: a titrant of known concentration is added to the analyte until the endpoint is reached, and the volume of titrant used reveals the analyte's concentration.

A colour change is the usual visual cue that neutralisation has been achieved. Indicators such as phenolphthalein and methyl orange are commonly used to detect the endpoint of acid–base titrations; the endpoint is reached when the indicator changes colour.

For example, when bromothymol blue changes from yellow to blue as a base is added to an acid, the titration has reached its endpoint. Note, however, that the endpoint (where the indicator changes colour) is not always exactly the same as the equivalence point (where the amounts of acid and base match according to the stoichiometry of the reaction).
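Because an indicator only works if its colour-change range includes the equivalence-point pH, indicator choice can be sketched as a simple range check. The transition ranges below are approximate textbook values and the helper name `suitable_indicators` is hypothetical. In practice the steep pH jump in a strong acid–strong base titration means several indicators give acceptable results, so this is a simplification.

```python
# Approximate colour-change (transition) ranges, in pH units.
INDICATOR_RANGES = {
    "methyl orange": (3.1, 4.4),
    "bromothymol blue": (6.0, 7.6),
    "phenolphthalein": (8.2, 10.0),
}


def suitable_indicators(equivalence_ph):
    """Return indicators whose transition range contains the equivalence-point pH."""
    return [
        name
        for name, (low, high) in INDICATOR_RANGES.items()
        if low <= equivalence_ph <= high
    ]


# Strong acid with strong base: equivalence pH is about 7.
print(suitable_indicators(7.0))
# Weak acid (e.g. acetic acid) with strong base: equivalence pH is above 7.
print(suitable_indicators(8.7))
```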

- Redox titration

As the name implies, this type of titration involves an oxidation–reduction (redox) reaction, in which electrons are transferred from one chemical species to another. The reducing agent donates electrons, while the oxidising agent accepts them.

A familiar example of a redox reaction, though not a titration, is the thermite reaction, in which aluminium reduces iron(III) oxide to metallic iron and is itself oxidised to aluminium oxide: Fe2O3 + 2 Al → 2 Fe + Al2O3.

The endpoint of a redox titration can be found potentiometrically, by measuring the potential difference between an indicator electrode and a reference electrode as the titrant is added; the potential changes sharply at the endpoint. Alternatively, a colour change in the analyte can be used. In some redox titrations no separate indicator is needed, because one of the reactants changes colour itself.

For example, when potassium permanganate is used as the oxidising agent, the first faint pink colour that persists in the solution marks the endpoint. Likewise, when iodine (present as the brown triiodide ion) is titrated with a reducing agent such as sodium thiosulfate, the endpoint is reached when the brown colour disappears.
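The volume of oxidising agent used at the endpoint is converted into the analyte's concentration through the balanced redox equation. The sketch below assumes, for illustration, that iron(II) is titrated with potassium permanganate in acidic solution, where one permanganate ion oxidises five iron(II) ions; the function name `iron_ii_molarity` and the data are hypothetical.

```python
# Balanced equation in acidic solution:
#   MnO4- + 5 Fe2+ + 8 H+ -> Mn2+ + 5 Fe3+ + 4 H2O
FE_PER_MNO4 = 5  # mol Fe2+ oxidised per mol MnO4-


def iron_ii_molarity(kmno4_molarity, kmno4_volume_ml, sample_volume_ml):
    """Fe2+ concentration (mol/L) from the permanganate volume at the endpoint."""
    mol_mno4 = kmno4_molarity * kmno4_volume_ml / 1000  # mL -> L
    mol_fe = FE_PER_MNO4 * mol_mno4
    return mol_fe / (sample_volume_ml / 1000)


# Hypothetical data: 18.50 mL of 0.0200 M KMnO4 reaches the persistent
# pink endpoint for a 25.00 mL sample.
print(f"[Fe2+] = {iron_ii_molarity(0.0200, 18.50, 25.00):.4f} mol/L")
```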

The colour change of a reactant is not always sharp enough to mark the endpoint, however. For instance, potassium dichromate turns from orange to green as it is reduced, but this change develops gradually and is not a definite sign that the titration has ended. An indicator such as sodium diphenylamine sulfonate is added to give a clear endpoint.

Wine is one of the most common commercial products analysed by redox titration. For instance, to measure the sulfur dioxide content of wine, iodine can be used as the oxidising agent and starch as the indicator. The endpoint is signalled when a blue colour appears, caused by the formation of a starch–iodine complex once all the sulfur dioxide has reacted.
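For the wine example, the calculation can be sketched as follows, assuming the reaction SO2 + I2 + 2 H2O → H2SO4 + 2 HI (one mole of sulfur dioxide per mole of iodine). The function name `so2_mg_per_litre` and the sample figures are hypothetical. In real wines other reducing substances can also consume iodine, so this simple calculation can overestimate the sulfur dioxide content.

```python
SO2_MOLAR_MASS = 64.06  # g/mol


def so2_mg_per_litre(iodine_molarity, iodine_volume_ml, wine_volume_ml):
    """Sulfur dioxide content (mg/L) from an iodine titration.

    Assumes SO2 + I2 + 2 H2O -> H2SO4 + 2 HI, i.e. 1 mol SO2 per mol I2.
    """
    mol_i2 = iodine_molarity * iodine_volume_ml / 1000  # mL -> L
    mg_so2 = mol_i2 * SO2_MOLAR_MASS * 1000  # g -> mg
    return mg_so2 / (wine_volume_ml / 1000)


# Hypothetical data: 2.50 mL of 0.0100 M iodine for a 25.0 mL wine sample.
print(f"SO2: {so2_mg_per_litre(0.0100, 2.50, 25.0):.1f} mg/L")
```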

Titrations are also widely used to analyse drug substances, including illicit drugs. In the pharmaceutical industry, they are crucial for verifying that each formulation contains the correct amount of every ingredient.

- Gas phase titration

This type of titration is carried out in the gas phase. A reactive gas is analysed by allowing it to react with a known amount of another gas. Gas phase titrations have an advantage over simple spectroscopic measurements because the result does not depend on the optical path length: the same path length is used to measure both the excess titrant and the product.

- Complexometric titration

This type of titration relies on the formation of a complex between the analyte and the titrant. It requires specialised complexometric indicators that form weak complexes with the analyte. The dark blue starch–iodine complex mentioned earlier is a familiar example of such an indicator complex. For titrating metal ions in solution, particularly calcium and magnesium ions, the chelating agent EDTA is commonly used as the titrant, with Eriochrome Black T as the indicator.
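Because EDTA binds calcium and magnesium ions in a 1:1 mole ratio, the volume of EDTA used gives the total amount of these ions directly. A common way to report the result is water hardness expressed as milligrams of calcium carbonate per litre. The Python sketch below assumes that convention; the function name `hardness_mg_per_l_caco3` and the numbers are hypothetical.

```python
CACO3_MOLAR_MASS = 100.09  # g/mol, the reporting basis for hardness


def hardness_mg_per_l_caco3(edta_molarity, edta_volume_ml, sample_volume_ml):
    """Total hardness (Ca2+ + Mg2+) expressed as mg CaCO3 per litre.

    EDTA forms 1:1 complexes with Ca2+ and Mg2+, so the moles of EDTA used
    at the endpoint equal the total moles of these metal ions.
    """
    mol_metal = edta_molarity * edta_volume_ml / 1000  # mL -> L
    mg_as_caco3 = mol_metal * CACO3_MOLAR_MASS * 1000  # g -> mg
    return mg_as_caco3 / (sample_volume_ml / 1000)


# Hypothetical data: 12.00 mL of 0.0100 M EDTA for a 50.00 mL water sample.
print(f"Hardness: {hardness_mg_per_l_caco3(0.0100, 12.00, 50.00):.1f} mg/L as CaCO3")
```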

- Zeta potential titration

Instead of using a chemical indicator, a zeta potential titration is monitored using the zeta potential. The method is used to characterise heterogeneous systems such as colloids. The zeta potential is the electrical potential at the slipping plane, the boundary between the fluid layer that remains attached to a particle's surface and the surrounding mobile fluid. Measuring it during a titration can locate the isoelectric point, where the surface charge becomes zero as the pH is changed or a surfactant is added, and can determine the optimum dose for flocculation. The method is also applicable to the design of suspension drugs.
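Finding the isoelectric point amounts to locating where the measured zeta potential crosses zero. A minimal sketch, assuming readings taken at increasing pH and straight-line interpolation between neighbouring points; the function name `zero_crossing` and the data are hypothetical.

```python
def zero_crossing(x_values, zeta_mv):
    """Return the x value (e.g. pH) at which the zeta potential crosses zero.

    Interpolates linearly between the first pair of neighbouring readings
    whose zeta potentials have opposite signs. Returns None if no crossing.
    """
    for i in range(len(x_values) - 1):
        x1, z1 = x_values[i], zeta_mv[i]
        x2, z2 = x_values[i + 1], zeta_mv[i + 1]
        if z1 == 0:
            return x1
        if z1 * z2 < 0:
            # Straight line through (x1, z1) and (x2, z2), solved for zeta = 0.
            return x1 - z1 * (x2 - x1) / (z2 - z1)
    if zeta_mv and zeta_mv[-1] == 0:
        return x_values[-1]
    return None


# Hypothetical readings: zeta potential (mV) against pH.
ph_readings = [3.0, 4.0, 5.0, 6.0, 7.0]
zeta_readings = [30.0, 20.0, 8.0, -6.0, -20.0]
print(f"Isoelectric point: pH {zero_crossing(ph_readings, zeta_readings):.2f}")
```

The same search could be applied to readings taken against flocculant dose instead of pH.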

- Assays

Assays are a very useful laboratory testing method for biological samples. Some assays are a type of titration used to determine the concentration of viruses or bacteria in a sample. They rely on serial dilution: the sample is diluted step by step by a fixed factor, and the result at a given dilution is used to work back to the original concentration.
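For a bacterial sample, one common way to turn a serial dilution into a concentration is a plate count: colonies are counted on a plate spread from one dilution, and the count is scaled back by the dilution and the volume plated. A minimal sketch, assuming tenfold dilution steps and a countable plate (conventionally around 30 to 300 colonies); the function names `serial_dilutions` and `cfu_per_ml` and the counts are hypothetical.

```python
def serial_dilutions(steps, factor=10):
    """Cumulative dilution after each of `steps` equal dilution steps."""
    return [1 / factor ** n for n in range(1, steps + 1)]


def cfu_per_ml(colony_count, dilution, volume_plated_ml):
    """Colony-forming units (CFU) per mL of the original, undiluted sample."""
    if not 0 < dilution <= 1:
        raise ValueError("dilution must be a fraction between 0 and 1")
    if volume_plated_ml <= 0:
        raise ValueError("plated volume must be positive")
    return colony_count / (dilution * volume_plated_ml)


# Hypothetical count: 150 colonies from 0.1 mL of the sixth tenfold dilution.
series = serial_dilutions(6)
print(f"{cfu_per_ml(150, series[-1], 0.1):.2e} CFU/mL")
```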

How Does Titration Work?

Scientists and laboratory technicians use these different types of titration for a wide variety of purposes, such as developing medicines and diagnosing diseases. However, the principle behind them is the same: a measured quantity of one substance is added to another until a detectable endpoint, such as neutralisation or a target concentration, is reached.

In most cases, this is done by gradually adding a solution of known concentration to a solution of unknown concentration. Bacterial assays are also considered titrations, because they involve a similar gradual, stepwise dilution process.

Chemical indicators are typically used to detect the endpoint of a titration, but the equivalence point can also be calculated from the stoichiometry of the reaction, using quantities such as concentrations, volumes and molar masses. Instruments such as pH meters and calorimeters can also be used to determine the endpoint.
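The stoichiometric calculation behind most titrations fits in a few lines. This sketch assumes volumes in millilitres and a mole ratio taken from the balanced equation; the function names `analyte_molarity` and `analyte_mass_g` are hypothetical, and the example uses sulfuric acid titrated with sodium hydroxide (H2SO4 + 2 NaOH → Na2SO4 + 2 H2O).

```python
def analyte_molarity(titrant_molarity, titrant_volume_ml, analyte_volume_ml,
                     analyte_per_titrant=1.0):
    """Analyte concentration (mol/L) from the titrant volume at equivalence.

    analyte_per_titrant is the mole ratio from the balanced equation,
    e.g. 0.5 for H2SO4 + 2 NaOH (1 mol acid per 2 mol base).
    """
    mol_titrant = titrant_molarity * titrant_volume_ml / 1000  # mL -> L
    mol_analyte = mol_titrant * analyte_per_titrant
    return mol_analyte / (analyte_volume_ml / 1000)


def analyte_mass_g(molarity, volume_ml, molar_mass_g_per_mol):
    """Mass of analyte (g) in the titrated volume, via its molar mass."""
    return molarity * volume_ml / 1000 * molar_mass_g_per_mol


# Hypothetical data: 20.00 mL of 0.100 M NaOH neutralises 25.00 mL of H2SO4.
c_acid = analyte_molarity(0.100, 20.00, 25.00, analyte_per_titrant=0.5)
print(f"[H2SO4] = {c_acid:.4f} mol/L")
print(f"Mass of H2SO4 in sample = {analyte_mass_g(c_acid, 25.00, 98.08):.4f} g")
```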

What is Titration in Medicine?

In medicine, titration means adjusting the dose of a drug gradually in order to limit possible adverse reactions. This is important because people respond differently to medicines depending on factors such as age, comorbidities, weight, allergies, immunity and general biochemistry, all of which must be considered when administering certain types of medicine.

Using titration, a doctor gradually adjusts the dose of a prescription to achieve the best result for a given patient. The process can take weeks of dose adjustments until the dose that gives the greatest benefit with minimal or no side effects is found.

Titration Methods in the Pharmaceutical Industry

Titrations in the pharmaceutical industry are used primarily for analysis, product development and quality control. Many of the titration methods described above are used in the industry, but some are more useful than others. Here are three of the most common pharmaceutical applications of titration:

- Purity analysis

Medicines have effective dose levels, so the amount of active ingredient must be accurately and precisely controlled in each batch of pills, capsules or liquid medicines. Other pharmaceutical products, such as vitamin supplements, need similar accuracy and precision in their dosage. The purity of a drug's active ingredient can be determined by titration in an organic, non-aqueous solvent.
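A purity result is usually reported as a percentage of the sample mass. The sketch below shows that calculation under stated assumptions: the active ingredient reacts with the titrant in a known mole ratio, the volume of a blank titration is subtracted, and all names and figures (including the 180.16 g/mol molar mass) are hypothetical.

```python
def percent_purity(titrant_molarity, titrant_volume_ml, sample_mass_g,
                   molar_mass_g_per_mol, analyte_per_titrant=1.0,
                   blank_volume_ml=0.0):
    """Active-ingredient content as % of the weighed sample mass.

    blank_volume_ml is the titrant consumed by the solvent alone, which is
    subtracted before converting to moles of active ingredient.
    """
    net_volume_ml = titrant_volume_ml - blank_volume_ml
    mol_titrant = titrant_molarity * net_volume_ml / 1000  # mL -> L
    mass_found_g = mol_titrant * analyte_per_titrant * molar_mass_g_per_mol
    return 100 * mass_found_g / sample_mass_g


# Hypothetical assay: 0.4000 g sample, 22.00 mL of 0.1000 M titrant, 1:1 ratio.
print(f"Purity: {percent_purity(0.1000, 22.00, 0.4000, 180.16):.2f} %")
```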

- Content analysis

Redox titration can be used to analyse the content of an unknown drug sample or substance. It is particularly useful for non-active ingredients such as preservatives, whose resistance to oxidation must be tested to ensure that the drug has an adequate shelf life.

- Karl Fischer water/moisture determination

Determining the moisture content of pharmaceutical products is important because it affects their shelf life. The water content must be kept within the right range for the products to store well.

Two Karl Fischer methods can be used to determine the moisture content of a sample: the coulometric Karl Fischer method is used to detect small amounts of moisture, while the volumetric Karl Fischer method is used when samples have a moisture content higher than 1–2% or contain ketones or aldehydes.
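Both Karl Fischer calculations are short. In the volumetric method, the titrant's water equivalence (its titer, in mg of water per mL) is multiplied by the volume used. In the coulometric method, iodine is generated electrically and two electrons are needed per molecule of water, so the charge passed gives the amount of water via the Faraday constant. A minimal sketch; the function names and numbers are hypothetical.

```python
FARADAY = 96485.33212      # C/mol
WATER_MOLAR_MASS = 18.015  # g/mol
ELECTRONS_PER_WATER = 2    # 2 I- -> I2 + 2 e-, and 1 I2 reacts with 1 H2O


def volumetric_water_percent(titrant_volume_ml, titer_mg_per_ml, sample_mass_mg):
    """Water content (% w/w) from a volumetric Karl Fischer titration."""
    water_mg = titrant_volume_ml * titer_mg_per_ml
    return 100 * water_mg / sample_mass_mg


def coulometric_water_mg(charge_c):
    """Mass of water (mg) equivalent to the charge passed in a coulometric run."""
    mol_water = charge_c / (ELECTRONS_PER_WATER * FARADAY)
    return mol_water * WATER_MOLAR_MASS * 1000  # g -> mg


# Hypothetical runs: 3.20 mL of 5.0 mg/mL titrant for an 800 mg sample,
# and 5.0 C passed in a coulometric cell.
print(f"Volumetric: {volumetric_water_percent(3.20, 5.0, 800.0):.2f} % water")
print(f"Coulometric: {coulometric_water_mg(5.0):.3f} mg water")
```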